Microsoft's serverless platform is continually improving, with better stability and more features. Its out-of-the-box integration with many services makes serverless an appealing way to solve a problem. I was recently asked by a customer to implement a solution that copies JSON data posted to a Service Bus topic into Azure Data Lake, so that they could run offline analytics and reporting.

At this point, I would have suggested using Azure Event Grid instead of Service Bus, since the solution needs to support multiple subscribers and Event Grid is a lot more lightweight. However, Service Bus topics can also accommodate multiple subscribers and, besides, the customer had already committed to the technology. This is absolutely fine, since in our role we are asked to work within the current budget and technology constraints.

Initially, I considered using Azure Functions, but I quickly remembered that there was no output binding for Data Lake. I could create a custom binding using the [bindings extensibility framework](https://github.com/Azure/azure-webjobs-sdk/wiki/Creating-custom-input-and-output-bindings), but this would require extra effort and add further complications. Besides, our serverless platform is more than just Functions. [Azure Logic Apps](https://docs.microsoft.com/en-us/azure/logic-apps/logic-apps-overview) was a perfect fit for this job. With over 190 connectors available, it didn't take long to find connectors for both Service Bus and Data Lake. As a developer, I'm usually keen to write code to solve a problem, but by now I know that if there's a better way of solving the same problem without writing any code, I should use it. Less code means fewer chances of things going wrong, such as inadvertently introducing a bug or an API/SDK change breaking the implementation. With a solution like Logic Apps, all I have to do is configure the right connectors and actions, and the service takes care of the rest.

In this post, I'll explain how to set up a Logic App that listens for messages on a Service Bus topic and copies the data into a Data Lake folder. I'm assuming that you already have a Data Lake account. If not, you can create one in a few clicks.

Create Service Bus and Topics

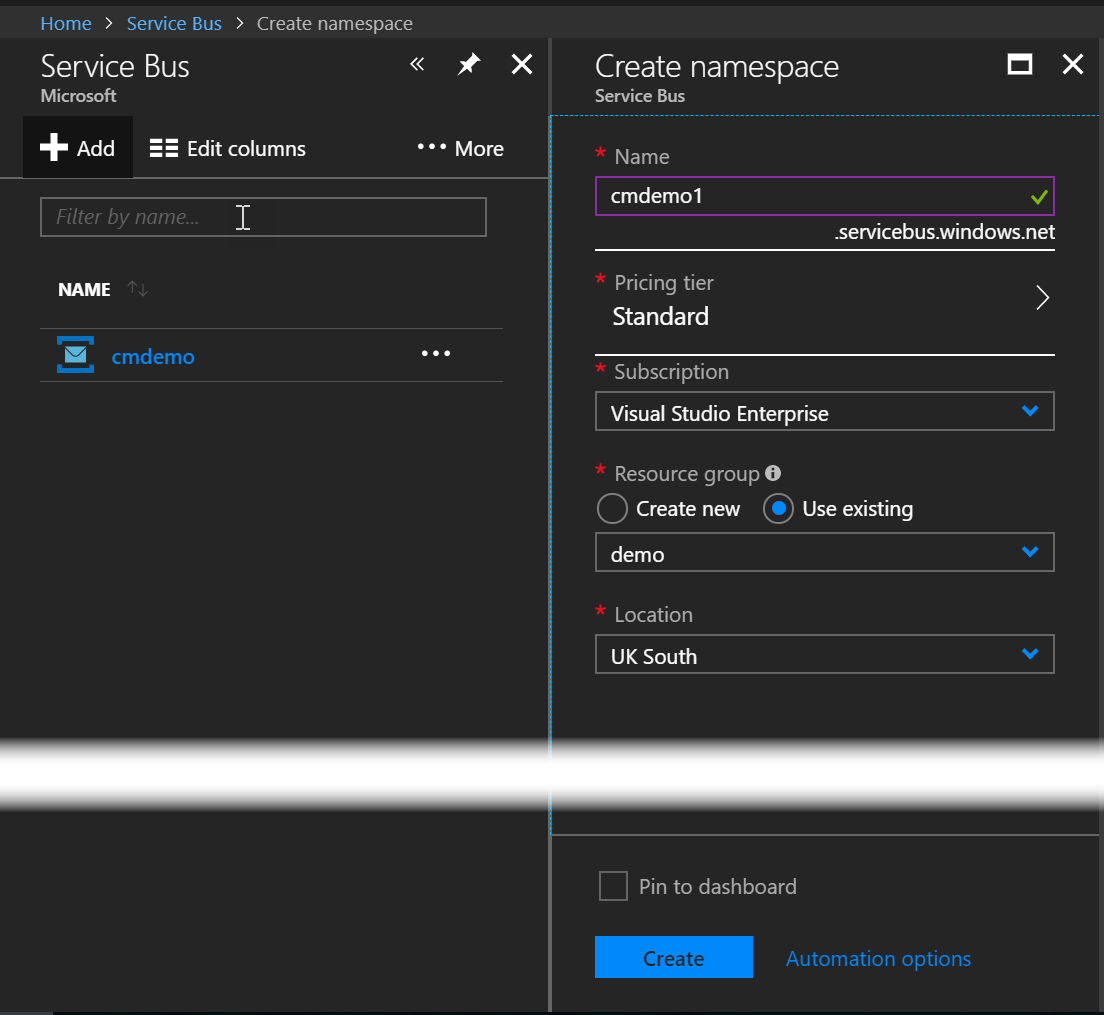

Go to the Azure portal and create a new Service Bus namespace. Topics require the Standard or Premium pricing tier, so don't pick Basic.

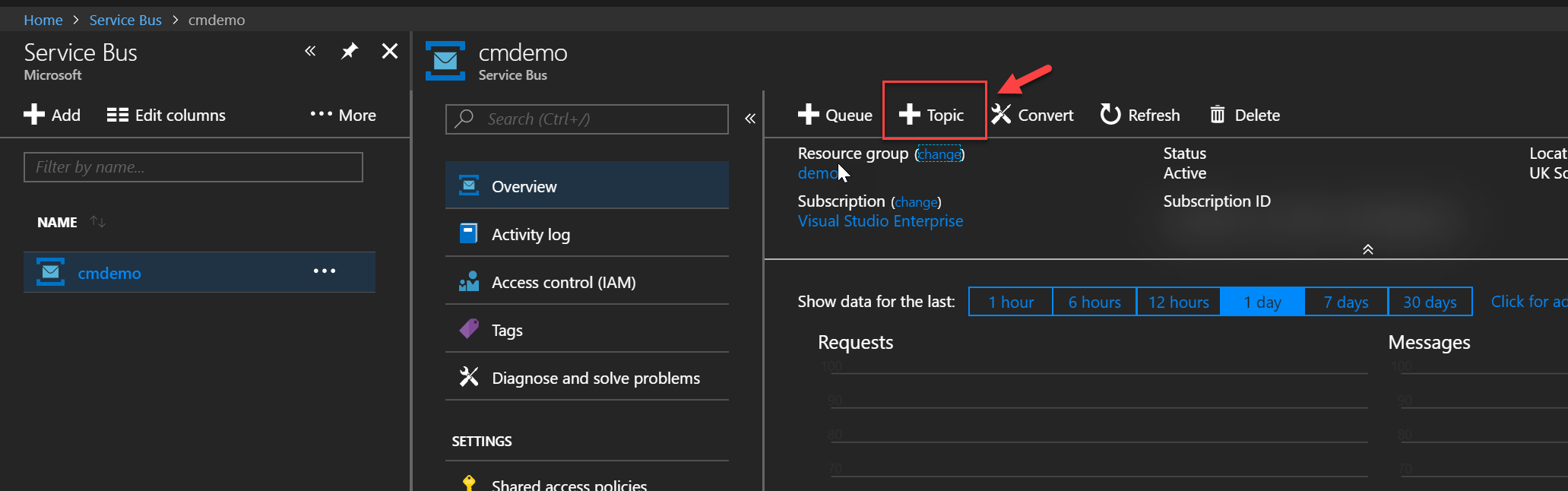

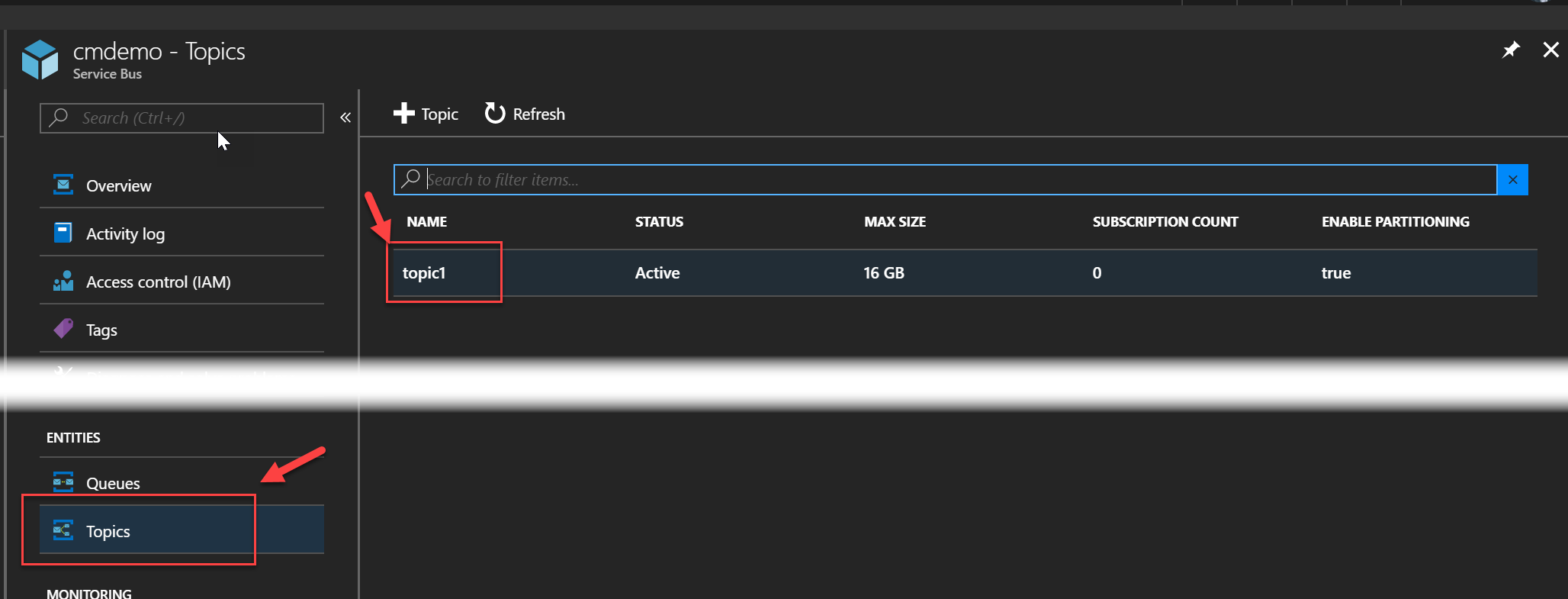

Once the Service Bus is provisioned, we need to create a new Topic

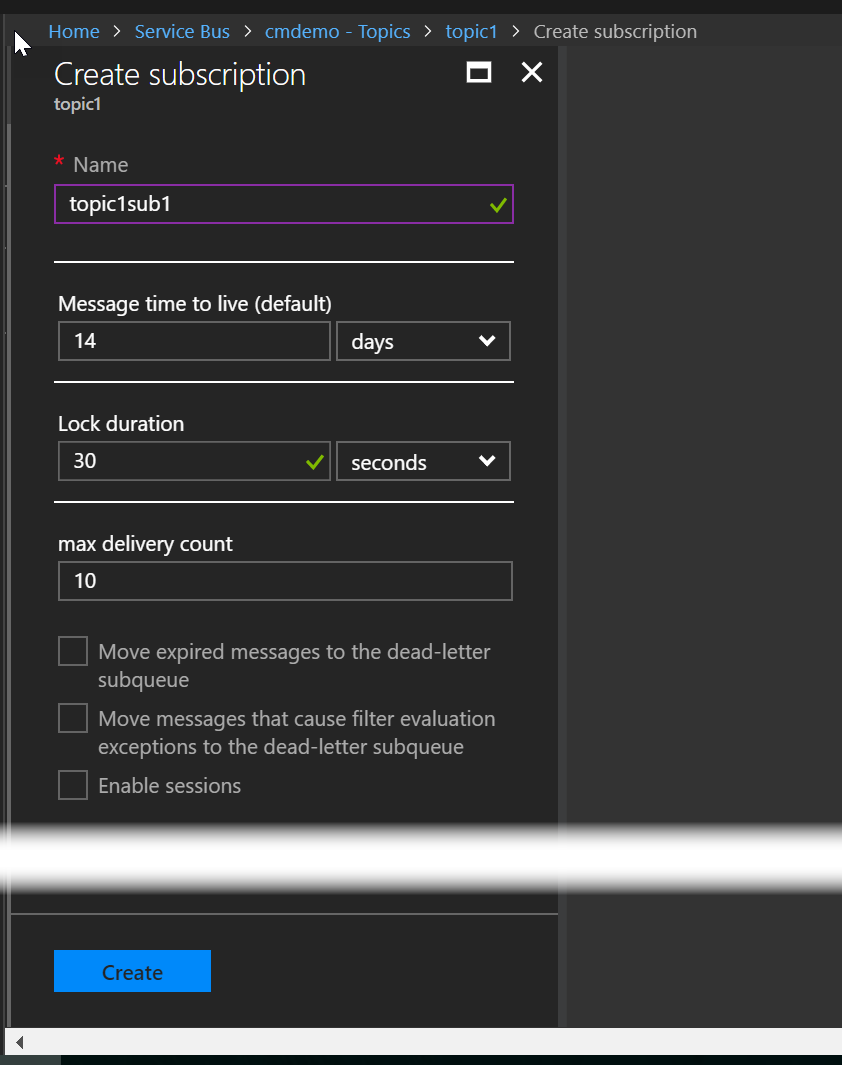

Within the topic, we need to add a subscription. Each subscription receives its own copy of every message sent to the topic, which is how a topic serves one or more independent subscribers. The screenshot above shows the steps needed to add a subscription.
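If you'd rather script this step than click through the portal, here is a minimal Python sketch. It assumes the `azure-servicebus` package (v7 or later) and a connection string whose policy has Manage rights. The subscription name `sub1` and the `SERVICEBUS_CONNECTION_STRING` environment variable are hypothetical placeholders. The helper takes the admin client as a parameter, so it can be exercised without a live namespace.

```python
import os


def ensure_topic_and_subscription(admin_client, topic_name: str, subscription_name: str) -> None:
    """Create the topic and a subscription on it, skipping whichever already exists.

    `admin_client` is expected to behave like
    azure.servicebus.management.ServiceBusAdministrationClient.
    """
    existing_topics = {topic.name for topic in admin_client.list_topics()}
    if topic_name not in existing_topics:
        admin_client.create_topic(topic_name)

    existing_subscriptions = {
        sub.name for sub in admin_client.list_subscriptions(topic_name)
    }
    if subscription_name not in existing_subscriptions:
        admin_client.create_subscription(topic_name, subscription_name)


def main() -> None:
    # Imported here so the helper above can be reused without the SDK installed.
    from azure.servicebus.management import ServiceBusAdministrationClient

    # Hypothetical environment variable holding a connection string with Manage rights.
    conn_str = os.environ["SERVICEBUS_CONNECTION_STRING"]
    admin = ServiceBusAdministrationClient.from_connection_string(conn_str)
    ensure_topic_and_subscription(admin, "topic1", "sub1")  # placeholder names
```

Calling `main()` once the namespace exists is safe to repeat, because the helper checks for existing entities before creating them.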

Create the Logic App to move the data around

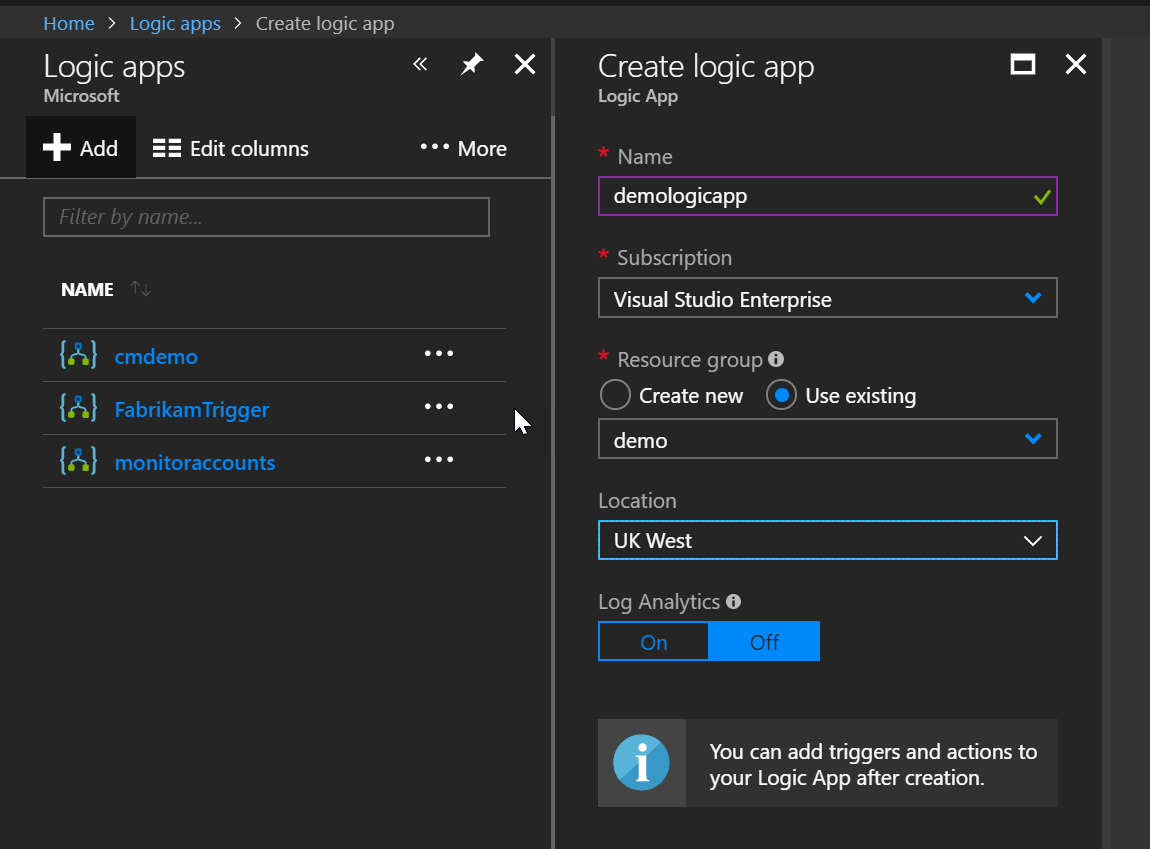

In the Azure portal, create a new Logic App

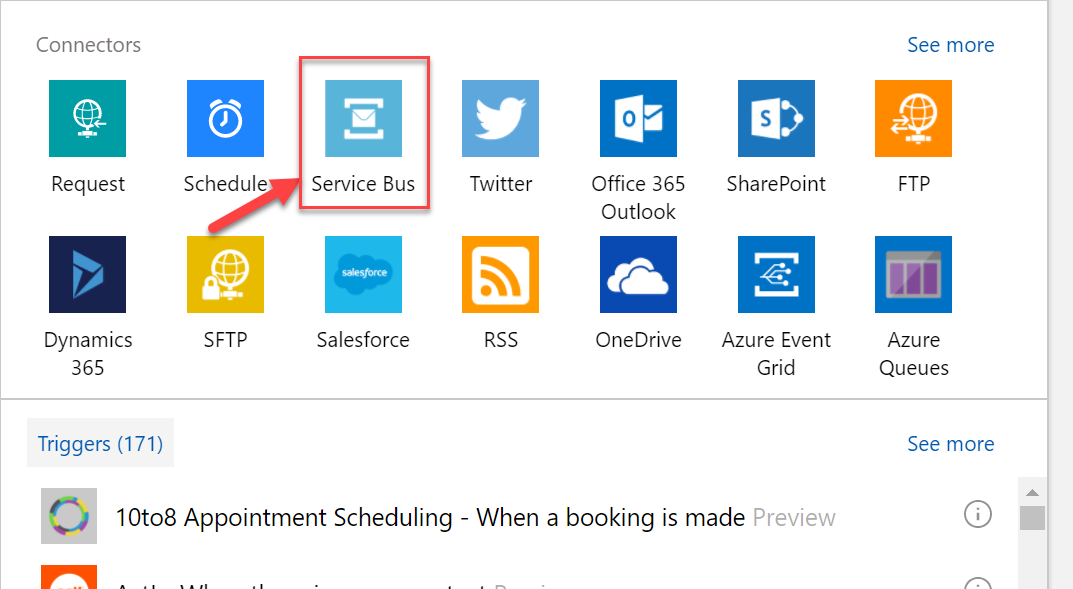

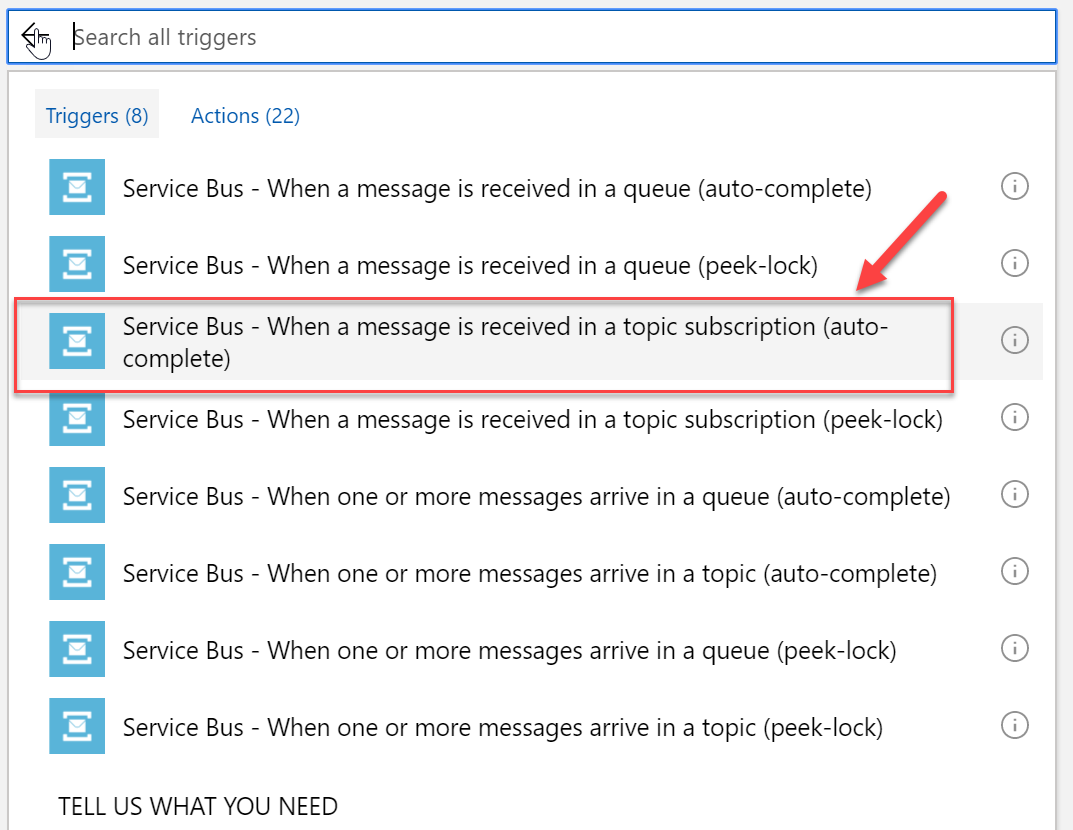

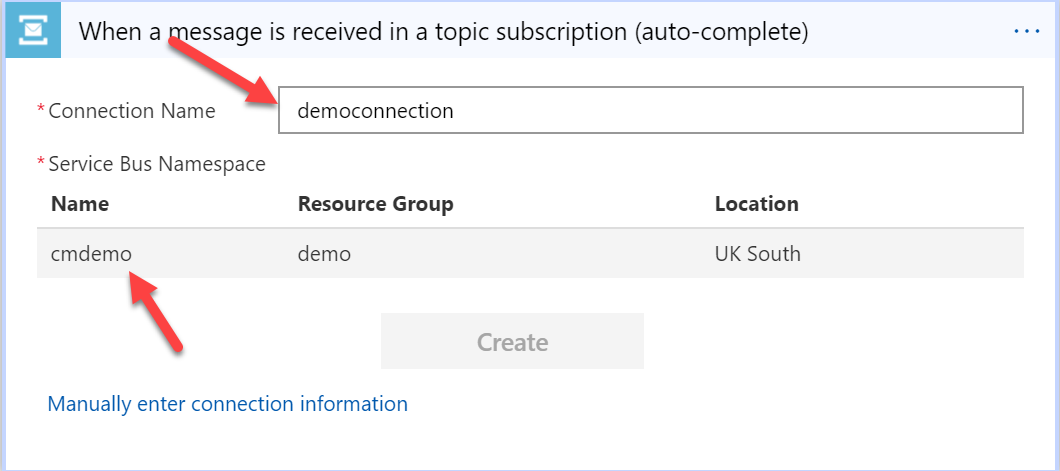

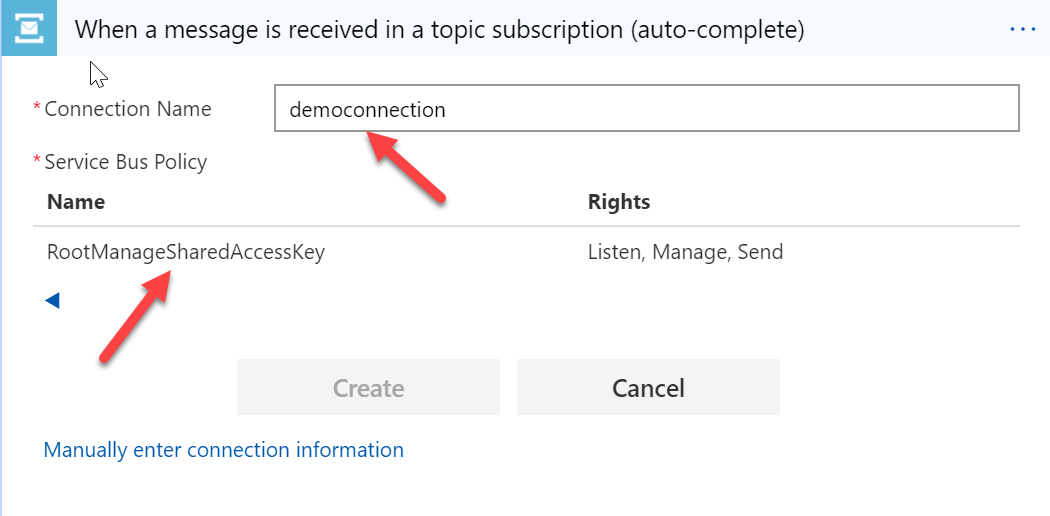

Next, we need to define the connector that will listen for new messages on the Service Bus topic we created in the previous step. The connection to the Service Bus uses the RootManageSharedAccessKey shared access policy which, by default, grants Manage, Send and Listen rights and is the equivalent of an admin key. You may want to consider using a Service Principal instead, with locked-down, restricted access to Azure resources. Technically, using the RootManageSharedAccessKey for the connection is not a problem, since the connection is stored encrypted within the service.
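Another way to avoid handing out the admin key is a shared access policy scoped to the topic with Listen rights only. A receiver needs nothing more than Listen. Clients then authenticate with SAS tokens signed by that policy's key. The Python sketch below shows how such a token is built. The signature is an HMAC-SHA256 over the URL-encoded resource URI and an expiry timestamp. The namespace `mynamespace` and the policy name `logicapp-listen` are hypothetical. The Logic App connector builds its own tokens from the connection string, so this is only an illustration of the mechanism.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse
from typing import Optional


def generate_sas_token(resource_uri: str, policy_name: str, policy_key: str,
                       ttl_seconds: int = 3600, now: Optional[float] = None) -> str:
    """Build a Service Bus SAS token signed with one shared access policy's key.

    The string to sign is the URL-encoded resource URI and the expiry
    (Unix time in seconds), joined by a newline.
    """
    issued_at = time.time() if now is None else now
    expiry = str(int(issued_at + ttl_seconds))
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    string_to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    # The policy key is used as-is (UTF-8 bytes) as the HMAC key.
    digest = hmac.new(policy_key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    signature = urllib.parse.quote_plus(base64.b64encode(digest).decode("ascii"))
    return (f"SharedAccessSignature sr={encoded_uri}&sig={signature}"
            f"&se={expiry}&skn={policy_name}")
```

For example, `generate_sas_token("https://mynamespace.servicebus.windows.net/topic1", "logicapp-listen", key)` produces a token that, if the policy only has Listen rights, can receive from `topic1` but cannot send or manage anything.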

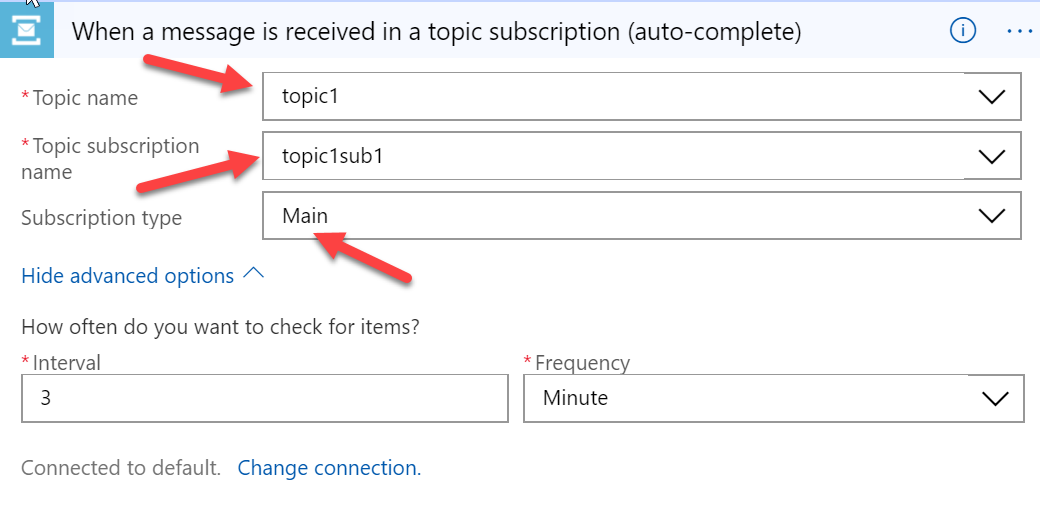

Define the right Service Bus instance and Topic

Make sure you save frequently as you progress so you don't lose your changes.

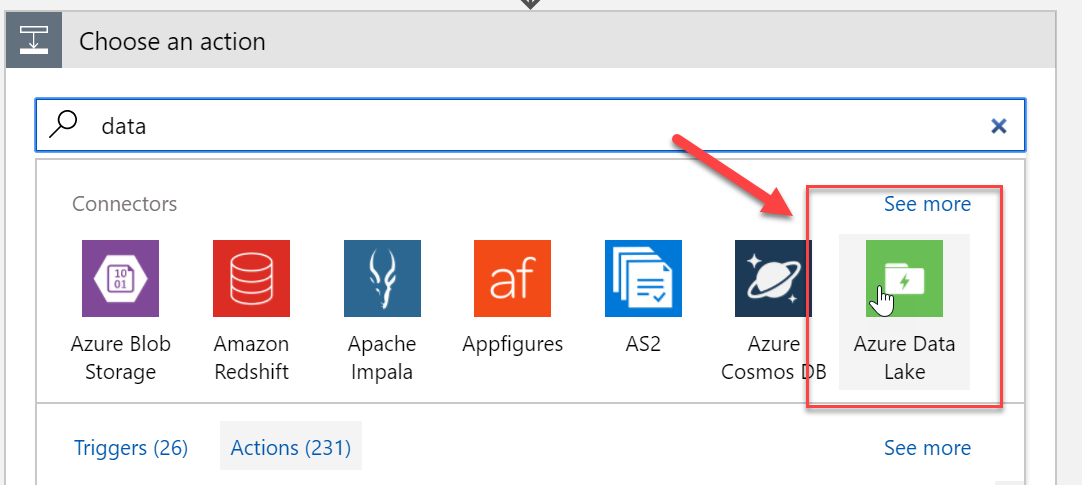

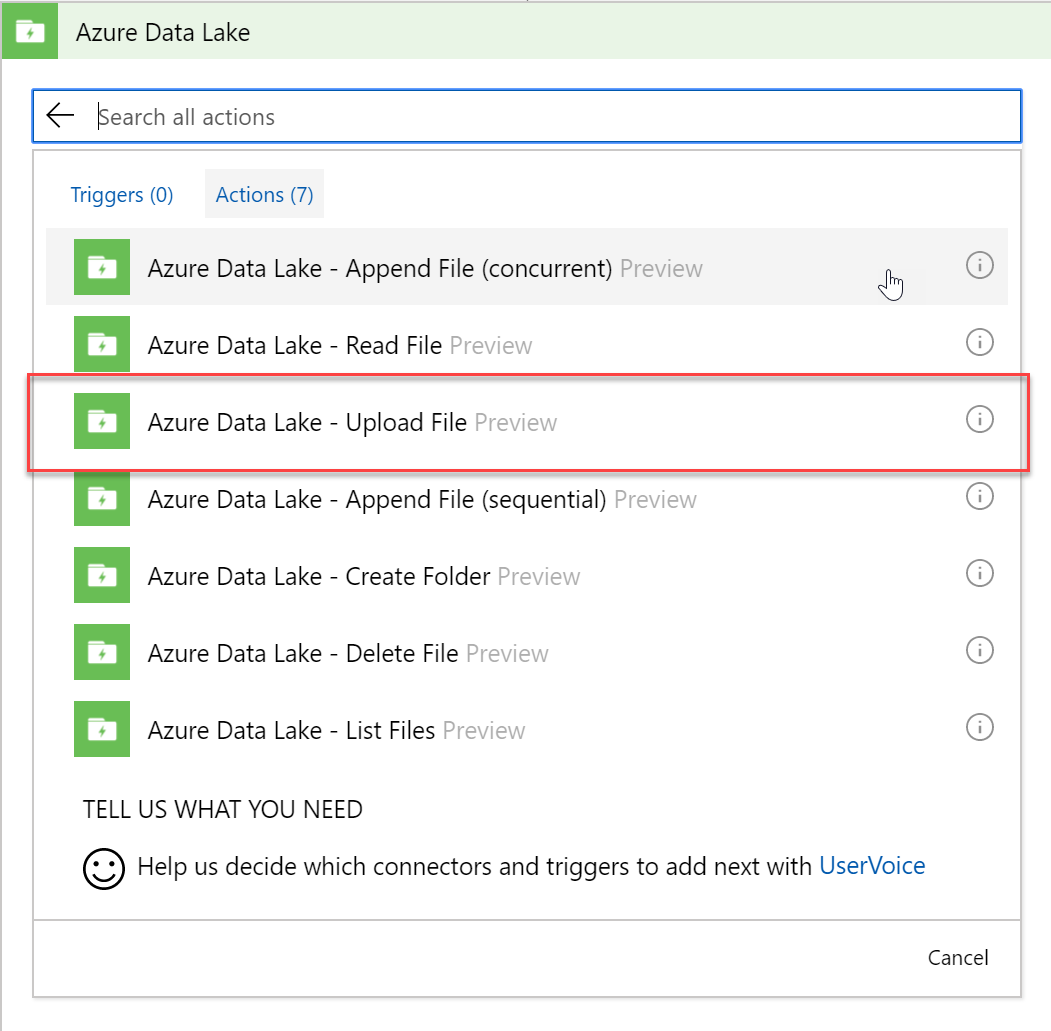

Next, we need to take the message received by the Service Bus trigger and store it directly in the Data Lake. To communicate with the Data Lake, we can again use a built-in connector. Configure the connector as shown in the screenshots below.

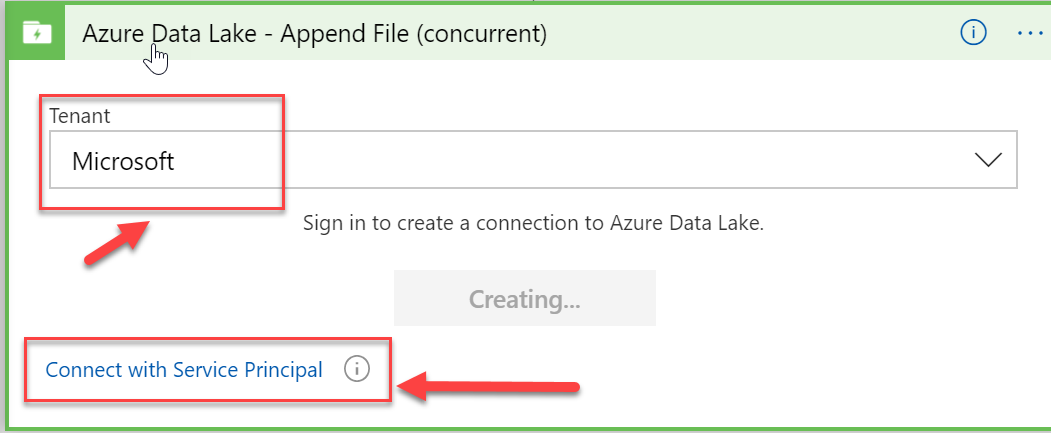

Because this is the first time we're connecting to the Data Lake account, we need to choose the right tenant and then log in. Alternatively, we can use a Service Principal for the connection credentials.

The action required for this task is Azure Data Lake - Upload file. Each new message should be stored as a separate file.
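A note on the file content. As far as I know, the Service Bus trigger exposes the message body as `ContentData` in base64-encoded form. If your uploaded files end up containing a base64 string rather than JSON, the Logic Apps expression `base64ToString(triggerBody()?['ContentData'])` decodes it before the upload. The Python sketch below mimics that decoding locally. You could use it, for example, to check a message body copied from the run history. It is an illustration only and not part of the Logic App.

```python
import base64
import json
from typing import Any


def decode_content_data(content_data: str) -> Any:
    """Decode a base64 message body and parse it as JSON.

    Mirrors base64ToString(triggerBody()?['ContentData']) in Logic Apps;
    json.loads raises ValueError if the decoded text is not valid JSON.
    """
    text = base64.b64decode(content_data).decode("utf-8")
    return json.loads(text)
```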

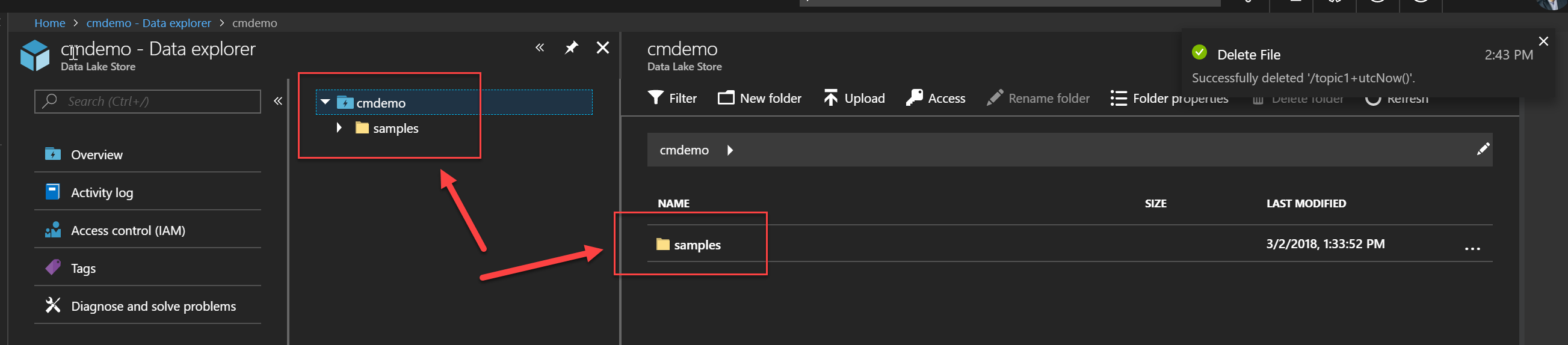

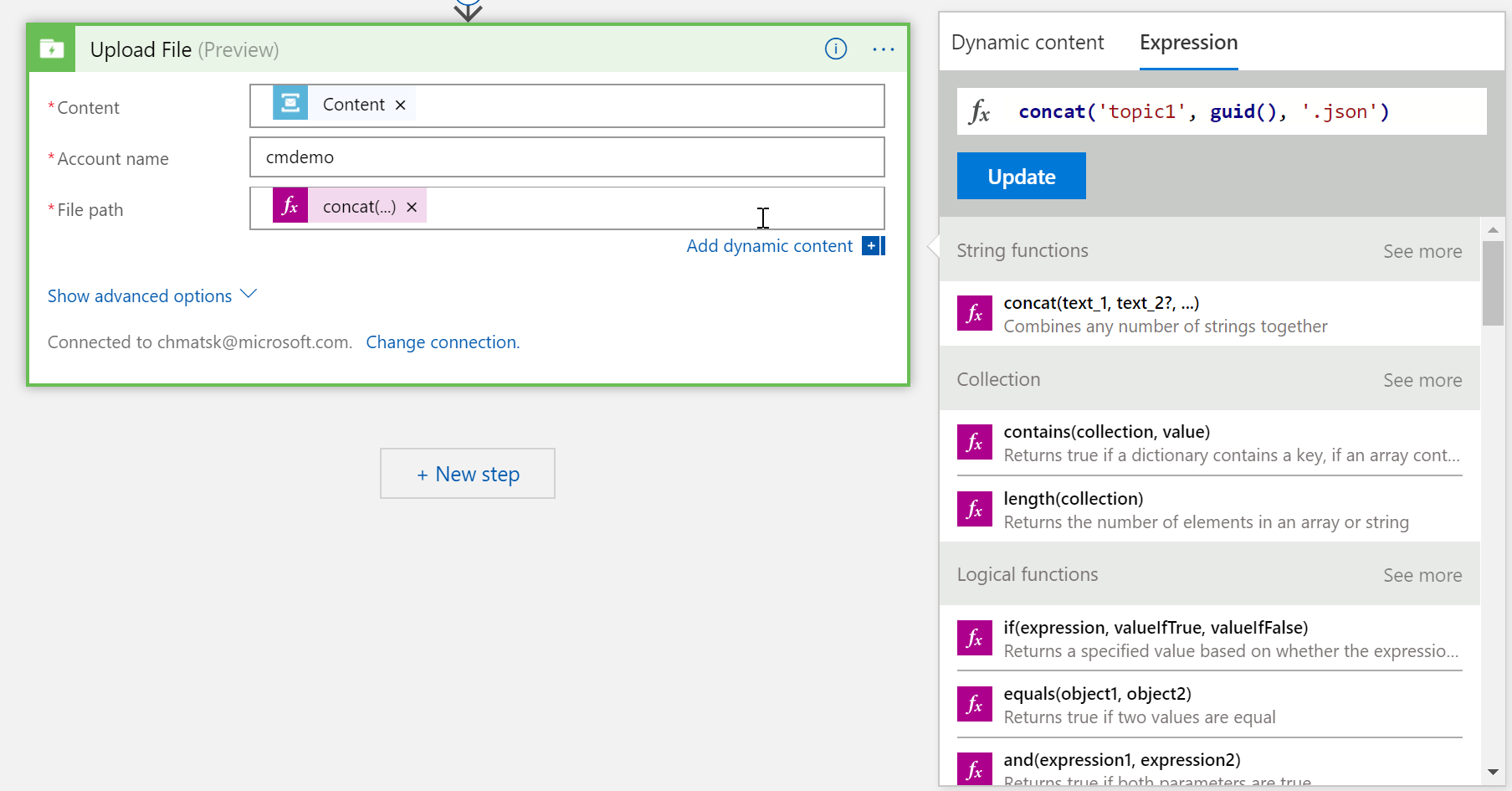

We next need to configure the right Data Lake account hierarchy and path

One thing to note is that I wanted each file to have a semi-dynamic name. For this, I took advantage of Logic App expressions and some of the built-in functions. In this instance, I decided that the file name should be made up of the topic name, an underscore, a GUID and the .json extension. The expression that achieves this is:

`concat('topic1_', guid(), '.json')`
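To see what names this expression produces, here is a rough local equivalent in Python. The function name `make_file_name` is hypothetical. Python's `uuid4()` renders as 36 lowercase hex characters with hyphens, which matches the default format of the Logic Apps `guid()` function.

```python
import uuid


def make_file_name(topic_name: str = "topic1") -> str:
    """Local equivalent of concat('topic1_', guid(), '.json')."""
    return f"{topic_name}_{uuid.uuid4()}.json"
```

A fresh GUID per message means two messages never overwrite each other's file, which is what lets each message be stored separately. The trade-off is that the name says nothing about the message itself. Also note that the topic name is hard-coded in the expression, so it needs updating if the trigger is pointed at a different topic.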

Save and close the Logic App Designer.

Testing the solution end-to-end

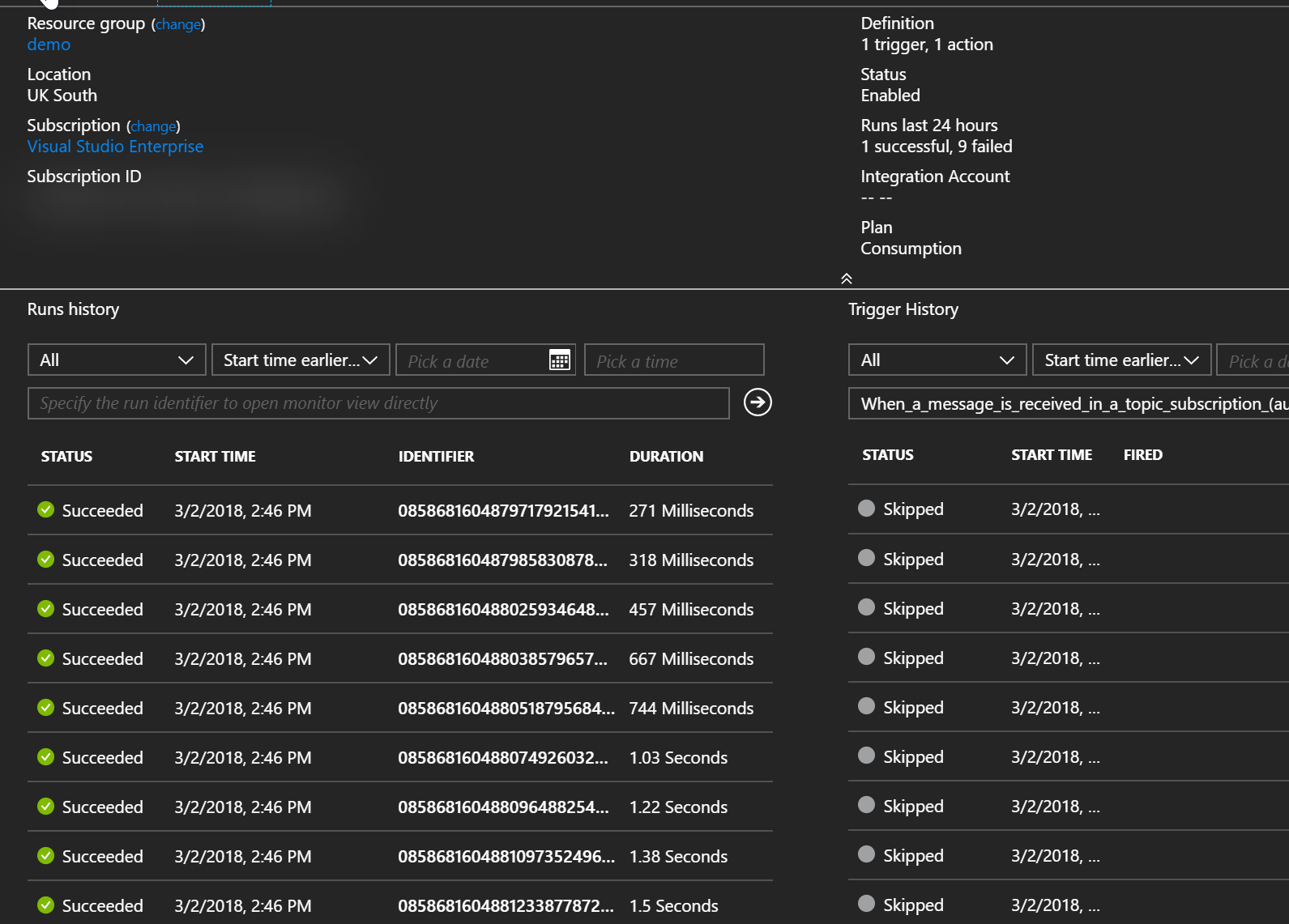

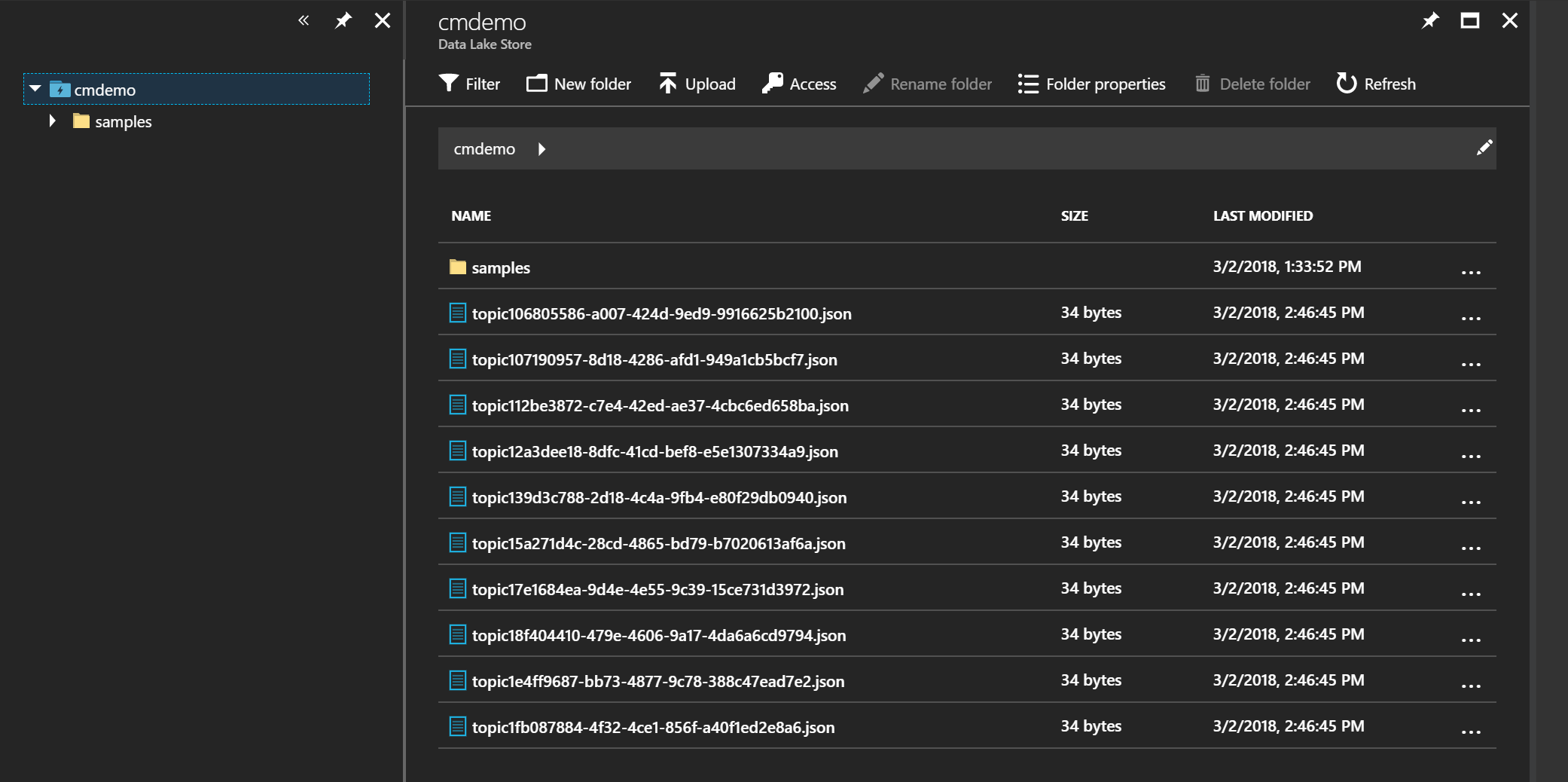

I've put together a small test harness that sends random JSON messages to a Service Bus topic. You can find it on [GitHub](https://github.com/cmatskas/ServiceBusHarness). Each run generates 10 messages. After the first run, I could see the messages processed by our Logic App and the corresponding files created in the Data Lake.
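If you prefer Python, the sketch below does roughly the same job as a harness like this. It is not a port of the GitHub project. It assumes the `azure-servicebus` package (v7 or later). The payload fields and the `SERVICEBUS_CONNECTION_STRING` environment variable are made up for illustration.

```python
import json
import os
import random
import uuid
from typing import Iterable, List, Optional


def make_random_payloads(count: int = 10, seed: Optional[int] = None) -> List[str]:
    """Generate `count` small random JSON documents (hypothetical schema)."""
    rng = random.Random(seed)
    payloads = []
    for _ in range(count):
        doc = {
            "id": str(uuid.uuid4()),
            "temperature": round(rng.uniform(-10.0, 40.0), 2),
            "status": rng.choice(["ok", "warning", "error"]),
        }
        payloads.append(json.dumps(doc))
    return payloads


def send_payloads(payloads: Iterable[str], topic_name: str = "topic1") -> None:
    """Send each payload as one message to the topic."""
    # Imported here so the payload generator works without the SDK installed.
    from azure.servicebus import ServiceBusClient, ServiceBusMessage

    conn_str = os.environ["SERVICEBUS_CONNECTION_STRING"]  # hypothetical variable name
    with ServiceBusClient.from_connection_string(conn_str) as client:
        with client.get_topic_sender(topic_name=topic_name) as sender:
            sender.send_messages(
                [ServiceBusMessage(p, content_type="application/json") for p in payloads]
            )
```

Running `send_payloads(make_random_payloads())` sends one batch of 10 messages. Each message should then result in one Logic App run and one file.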

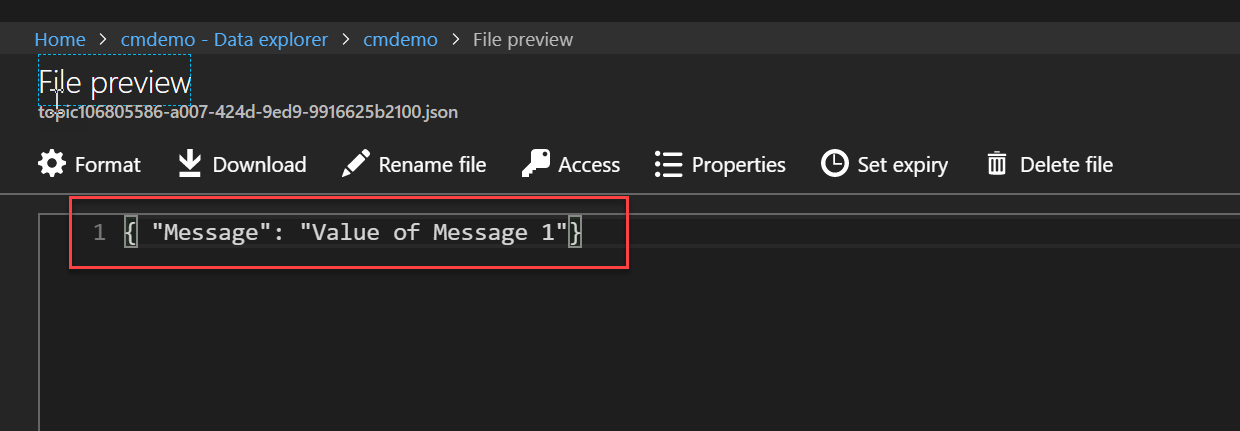

We can even use the File Preview feature in the Data Lake account to confirm that the data is in the correct format, i.e. the original JSON.
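Checking more than a handful of files one by one in File Preview gets tedious. An alternative is to download the folder contents by whatever means you prefer and compare them with what the harness sent. The Python sketch below covers only the comparison. It assumes you already hold the downloaded files as a hypothetical name-to-bytes dictionary. It checks that each name matches the pattern produced by `concat('topic1_', guid(), '.json')` and that the stored documents match the sent ones, ignoring order.

```python
import json
import re
from typing import Dict, Iterable, List

# Matches names produced by concat('topic1_', guid(), '.json').
NAME_PATTERN = re.compile(r"topic1_[0-9a-f]{8}(-[0-9a-f]{4}){3}-[0-9a-f]{12}\.json")


def _canonical(docs: Iterable[object]) -> List[str]:
    """Serialize documents deterministically so they can be compared as a sorted list."""
    return sorted(json.dumps(doc, sort_keys=True) for doc in docs)


def verify_uploads(sent: Iterable[str], downloaded: Dict[str, bytes]) -> List[str]:
    """Return a list of problems; an empty list means every sent message landed intact."""
    problems = []
    received = []
    for name, body in downloaded.items():
        if not NAME_PATTERN.fullmatch(name):
            problems.append(f"unexpected file name: {name}")
        try:
            received.append(json.loads(body))
        except ValueError:  # covers JSONDecodeError and bad UTF-8
            problems.append(f"{name} is not valid JSON")
    expected = [json.loads(s) for s in sent]
    if _canonical(expected) != _canonical(received):
        problems.append("sent and stored messages differ")
    return problems
```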

I've attached a copy of the Logic App ARM template below, if you're curious to see the actual ARM representation. This is what we would check in to source control in a proper CI/CD environment.

Summary

I hope this post shows how easy it is to integrate services (Microsoft and beyond) using Azure Serverless. Logic Apps come with over 190 connectors out of the box, and new ones are added all the time. What problems will you solve using Azure Serverless, now that you know the possibilities?