I'm currently working on a side project as an excuse to try some of the latest features in [.NET Core](https://www.microsoft.com/net/download/core). It's a small project with a couple of models and basic CRUD operations. The project deliberately touches everything new: ASP.NET Core 2.0 Preview, .NET Standard 2.0 Preview, the [Azure Storage SDK for .NET Core](https://www.nuget.org/packages/WindowsAzure.Storage), etc. This was a conscious decision, as I wanted to see how the latest tooling and libraries would work together. The migration from MVC 5 to ASP.NET Core was largely painless and needed only a few small changes, but bear in mind that this was a small project with a handful of controllers and minimal data models.

I have to say that working with .NET Core is an absolute joy, especially inside VS 2017 Preview (15.3), where IntelliSense truly shines. Don't get me wrong, I regularly alternate between my Mac and Windows machines, testing all 4 tooling options:

- CMD/Terminal

- Visual Studio 2017 Preview

- Visual Studio for Mac

- Visual Studio Code

But the IntelliSense I get in Razor views, tag helpers, etc. is unparalleled in VS 2017. Depending on the work I'm doing (server side or client side), I try to choose the best tool for the job :)

One thing I also wanted to do was secure access to the Storage Account using Shared Access Signature (SAS) authentication. To take it a step further, instead of using a single SAS for all access, I decided to use a per-transaction SAS. Unfortunately, the docs were a bit outdated and it was hard to find the missing bits. There were plenty of examples on securing Blob and Container storage with SAS, but nothing on Table Storage. So here's my attempt to capture the minimum code required for a working SAS authentication solution for Storage Tables. My code samples target .NET Core, but since I target .NET Standard, you could consume the code from anywhere (full .NET Framework, .NET Core, Xamarin).

The code

First we need to create a console application that targets .NET Core. I'll go with the dotnet CLI for this one:

```shell
mkdir storagesasdemo && cd storagesasdemo
dotnet new console
```

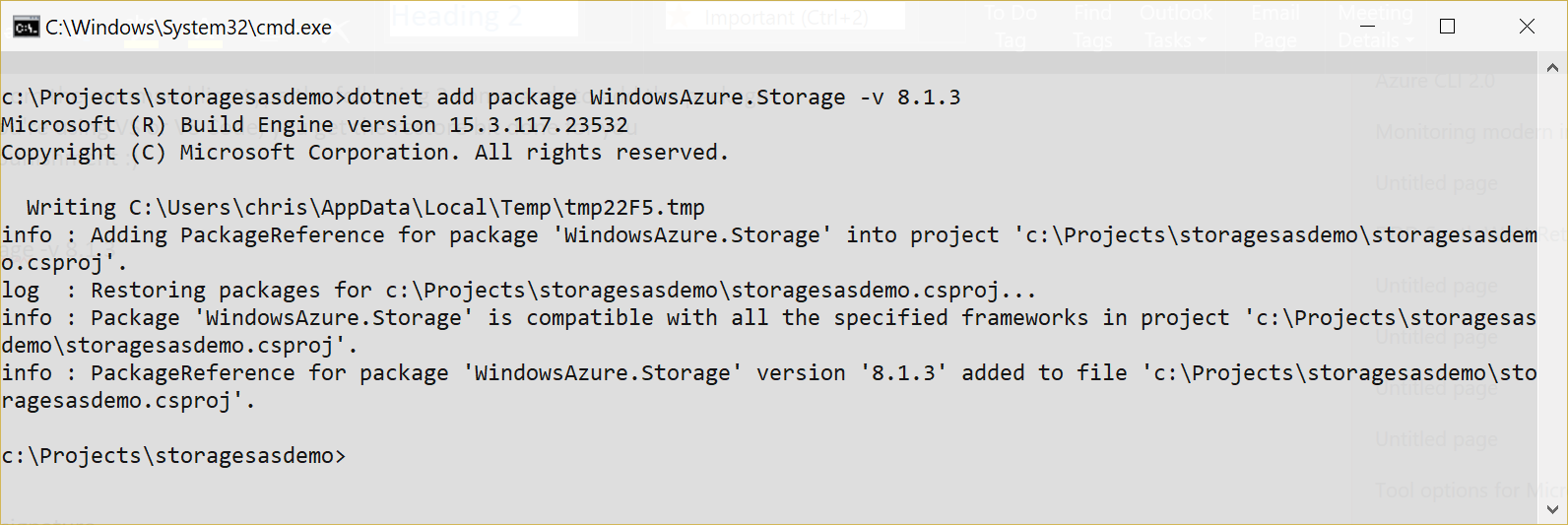

Add the Azure Storage NuGet package. From the command line, type the following 2 commands to add the package and restore it (important). I use the CLI again because it's cool and I'm a glutton for punishment :)

```shell
dotnet add package WindowsAzure.Storage -v 8.1.3
dotnet restore
```

The outcome should look like this:

Alternatively, you can use the NuGet package manager in VS, or edit the .csproj file directly and add the following lines:

```xml
<ItemGroup>
  <PackageReference Include="WindowsAzure.Storage" Version="8.1.3" />
</ItemGroup>
```

Next we need to add the code, which consists of 3 classes. I could have stuck everything in one file, but something, something, SOLID and good design etc.

- StorageService (provides the CORS and SAS policies, along with a wrapper around the table client)

- StudentRepository (for the CRUD operations)

- Student (the TableEntity, i.e. our model)
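The actual files are shown in the screenshots below. As a rough text sketch of the two data-side classes, here is one way Student and StudentRepository could look. The property names, the key design (course as PartitionKey, student id as RowKey) and the Func<CloudTable> constructor parameter are all assumptions. The repository asks for a freshly SAS-authenticated table on every call, which is one way to get a per-transaction SAS, and a factory such as GetSASTable() could be passed in.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.WindowsAzure.Storage.Table;

// Hypothetical Student entity; property names and key design are assumptions.
public class Student : TableEntity
{
    // Parameterless constructor so the SDK can materialize query results.
    public Student() { }

    // PartitionKey = course, RowKey = student id.
    public Student(string course, string studentId) : base(course, studentId) { }

    public string FirstName { get; set; }
    public string LastName { get; set; }
}

// Hypothetical repository: every operation gets its own SAS-authenticated table.
public class StudentRepository
{
    private readonly Func<CloudTable> getSasTable;

    public StudentRepository(Func<CloudTable> getSasTable)
    {
        this.getSasTable = getSasTable ?? throw new ArgumentNullException(nameof(getSasTable));
    }

    // Upsert; the SAS needs both Add and Update permissions for this.
    public Task<TableResult> InsertOrReplaceAsync(Student student) =>
        getSasTable().ExecuteAsync(TableOperation.InsertOrReplace(student));

    public async Task<Student> GetAsync(string course, string studentId)
    {
        TableResult result = await getSasTable()
            .ExecuteAsync(TableOperation.Retrieve<Student>(course, studentId));
        return result.Result as Student; // null when the entity does not exist
    }

    // Delete requires student.ETag to be set (from a previous read, or "*").
    public Task<TableResult> DeleteAsync(Student student) =>
        getSasTable().ExecuteAsync(TableOperation.Delete(student));
}
```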

Let's look at what each file should look like:

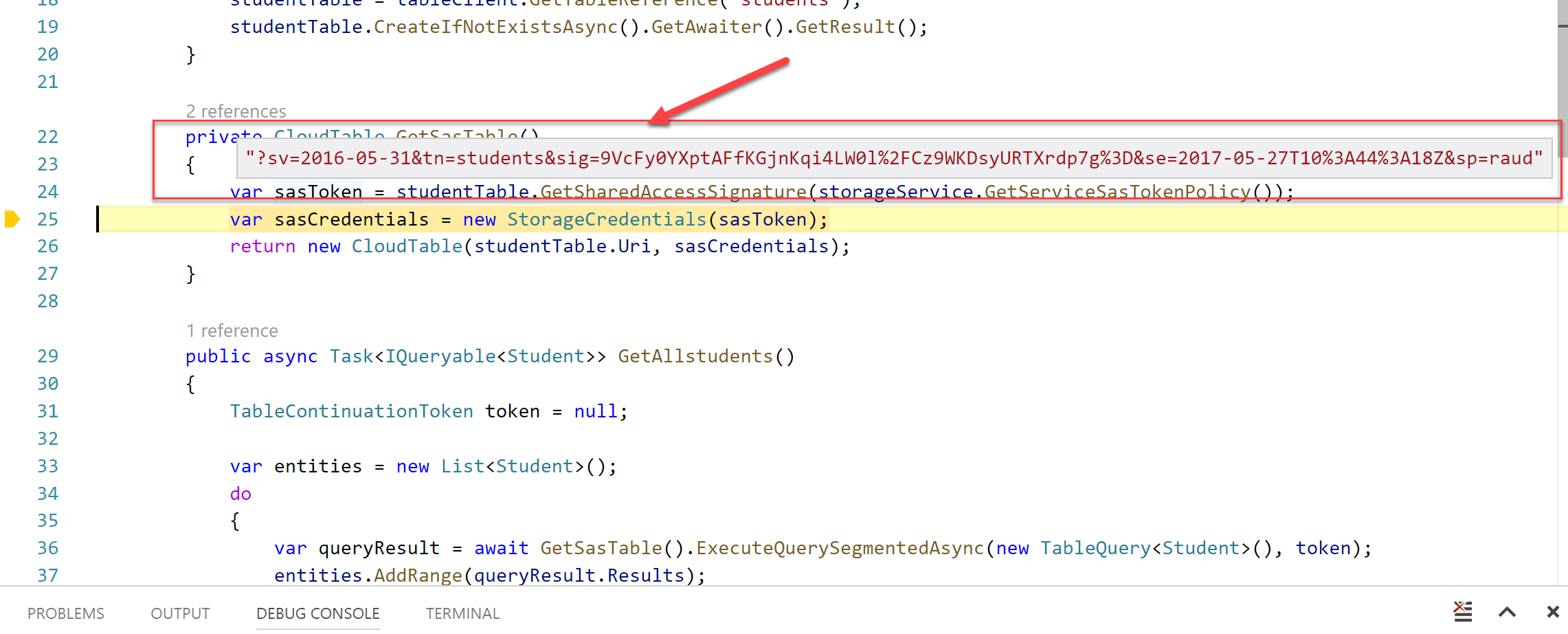

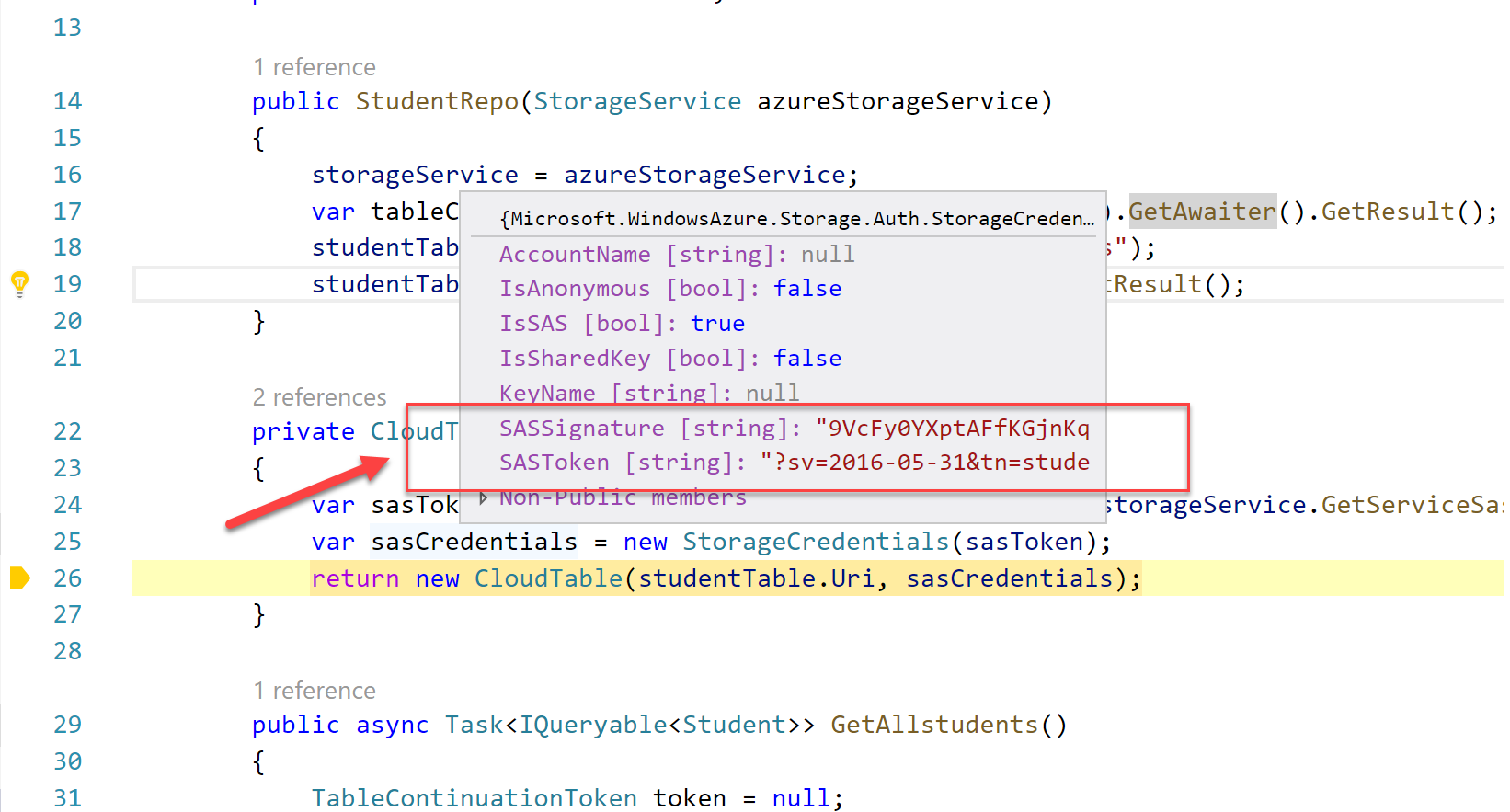

All the "magic" happens inside GetSASTable(), which pulls together the information needed to create a SAS token and returns a table reference authenticated with it, so that we can execute our queries on the storage account. You could also be a lot more specific and define a different SAS policy per operation (Insert, Read, Delete), but for me this one policy is enough.
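Since the StorageService file is only shown as a screenshot, here is a minimal sketch of the two SAS methods it exposes. GetServiceSasTokenPolicy() and GetSASTable() follow the names used in this post. The constructor parameters and the exact permission set are assumptions. The 2-minute expiry matches the one used later in this post. Signing happens locally with the account key, so no network call is made until the returned table is actually used. A table-scoped SAS cannot create the table itself, so the table has to exist already (for example, created once with the account-key reference via CreateIfNotExistsAsync()).

```csharp
using System;
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.Auth;
using Microsoft.WindowsAzure.Storage.Table;

// Sketch only: constructor parameters and permissions are assumptions.
public class StorageService
{
    private readonly CloudTable keyTable; // reference that still holds the account key

    public StorageService(string connectionString, string tableName)
    {
        CloudStorageAccount account = CloudStorageAccount.Parse(connectionString);
        keyTable = account.CreateCloudTableClient().GetTableReference(tableName);
    }

    // Ad hoc policy with a 2-minute lifetime. No start time is set, so the token
    // is valid immediately and start-time clock skew cannot reject it.
    public SharedAccessTablePolicy GetServiceSasTokenPolicy()
    {
        return new SharedAccessTablePolicy
        {
            SharedAccessExpiryTime = DateTimeOffset.UtcNow.AddMinutes(2),
            Permissions = SharedAccessTablePermissions.Query
                        | SharedAccessTablePermissions.Add
                        | SharedAccessTablePermissions.Update
                        | SharedAccessTablePermissions.Delete
        };
    }

    // Signs a fresh SAS and returns a table reference that authenticates with it only.
    public CloudTable GetSASTable()
    {
        string sasToken = keyTable.GetSharedAccessSignature(GetServiceSasTokenPolicy());
        return new CloudTable(keyTable.Uri, new StorageCredentials(sasToken));
    }
}
```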

In case you're wondering, the missing bit for me was how to get a SAS-authenticated table reference. There are 2 different ways to do this.

Option 1

```csharp
// SAS via the CloudTable constructor: table URI + SAS credentials
var sasToken = table.GetSharedAccessSignature(storageService.GetServiceSasTokenPolicy());
StorageCredentials sasCreds = new StorageCredentials(sasToken);

// Pass the SAS credentials, not tableClient.Credentials (which hold the account key)
CloudTable sasTable = new CloudTable(table.Uri, sasCreds);
```

Option 2

```csharp
// Build the full URI directly: the SAS token is a query string starting with "?"
var sasToken = table.GetSharedAccessSignature(storageService.GetServiceSasTokenPolicy());
string fullUriWithSAS = table.Uri.ToString() + sasToken;
CloudTable sasTable = new CloudTable(new Uri(fullUriWithSAS));
```
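Option 2 works because the SAS token is just a query string (fields such as sv, tn, sig, sp and se) appended to the table URI. The se field is the signed expiry, which is what eventually triggers the authentication error shown further down. The hypothetical helper below uses only the base class library (SasTokenInspector is not part of the Azure SDK). It reads se from either a bare token or a full URI such as fullUriWithSAS, which can help when debugging expiry issues.

```csharp
using System;
using System.Globalization;

// Hypothetical helper: reads the signed expiry ("se") from a SAS token or SAS URI.
public static class SasTokenInspector
{
    public static DateTimeOffset? GetExpiry(string sasTokenOrUri)
    {
        // Keep only the query part if a full URI was passed in.
        int q = sasTokenOrUri.IndexOf('?');
        string query = q >= 0 ? sasTokenOrUri.Substring(q + 1) : sasTokenOrUri;

        foreach (string pair in query.Split('&'))
        {
            int eq = pair.IndexOf('=');
            if (eq <= 0 || pair.Substring(0, eq) != "se") continue;

            // Values are URL-encoded, e.g. 2017-06-20T10%3A15%3A30Z
            string value = Uri.UnescapeDataString(pair.Substring(eq + 1));
            return DateTimeOffset.Parse(value, CultureInfo.InvariantCulture,
                DateTimeStyles.AssumeUniversal);
        }
        return null; // no expiry field present
    }

    public static bool IsExpired(string sasTokenOrUri, DateTimeOffset now)
    {
        DateTimeOffset? expiry = GetExpiry(sasTokenOrUri);
        return expiry.HasValue && expiry.Value <= now;
    }
}
```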

You'll also notice that we define a [CORS](https://developer.mozilla.org/en-US/docs/Web/HTTP/Access_control_CORS) policy in ConfigureCors(ServiceProperties serviceProperties) that allows only the approved origins. These origins will differ for your project.

```csharp
private void ConfigureCors(ServiceProperties serviceProperties)
{
    serviceProperties.Cors = new CorsProperties();
    serviceProperties.Cors.CorsRules.Add(new CorsRule()
    {
        AllowedHeaders = new List<string>() { "*" },
        AllowedMethods = CorsHttpMethods.Get | CorsHttpMethods.Head,
        // Origins are scheme + host (+ port), with no trailing slash
        AllowedOrigins = new List<string>() {
            "http://localhost:5000",
            "https://localhost:1659",
            "https://www.azurenotes.tech",
        },
        ExposedHeaders = new List<string> { "*" },
        MaxAgeInSeconds = 1800
    });
    // The rules only take effect once saved back to the service,
    // e.g. with tableClient.SetServicePropertiesAsync(serviceProperties)
}
```

And putting it all together in the console application, we get the following code:

We can now run the application with the tool of our choice: dotnet run from the command line, or the debugger in VS/VS Code.

Prerequisites: to run the code locally, you need to install the [Azure Storage emulator](https://docs.microsoft.com/en-us/azure/storage/storage-use-emulator). Alternatively, you can run it against an active Azure Storage account. In that case, make sure you change the connection string to point to your Storage Account before you run the code.
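If you'd rather not edit the connection string in code when switching between the emulator and a real account, one option is to read it from an environment variable. Here is a small sketch. "UseDevelopmentStorage=true" is the standard Storage Emulator shortcut. The StorageConfig class and the AZURE_STORAGE_CONNECTION_STRING variable name are assumptions for illustration.

```csharp
using System;

// Hypothetical helper: use a real account's connection string when the
// environment variable is set, otherwise fall back to the local Storage Emulator.
public static class StorageConfig
{
    public const string EmulatorConnectionString = "UseDevelopmentStorage=true";

    public static string GetConnectionString(string envVarName = "AZURE_STORAGE_CONNECTION_STRING")
    {
        string value = Environment.GetEnvironmentVariable(envVarName);
        return string.IsNullOrWhiteSpace(value) ? EmulatorConnectionString : value;
    }
}
```

The result can be passed straight to CloudStorageAccount.Parse(...), and the account key never has to be committed to source control.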

Setting a breakpoint at the critical point gives us a glimpse of what happens when we call the GetSASTable() method:

If I pause the debugger for longer than the SAS token's expiry time (in this example, the SAS policy expires after 2 minutes), I get an authentication error similar to the one below:

Unhandled Exception: Microsoft.WindowsAzure.Storage.StorageException: Server failed to authenticate the request. Make sure the value of Authorization header is formed correctly including the signature.
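With a per-transaction SAS, each call normally gets a fresh token, so this error should only appear when a token expires between being minted and being used, as with the paused debugger above. The service reports this failure as HTTP 403. If you want to absorb that case, one option is to mint a new token and retry once. Below is a hypothetical, SDK-agnostic sketch: SasRetry is not an Azure SDK type, and the auth-failure check is passed in as a predicate. The usage shown in the comment is an assumption about how it could be wired to GetSASTable().

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical helper (not part of the Azure SDK). With the Azure Storage SDK you might call:
//   SasRetry.ExecuteAsync(storageService.GetSASTable,
//       t => t.ExecuteAsync(operation),
//       ex => ex is StorageException se && se.RequestInformation?.HttpStatusCode == 403);
public static class SasRetry
{
    // Mints a SAS-authenticated target and runs the operation. On an authentication
    // failure it mints a fresh token and retries exactly once.
    public static async Task<TResult> ExecuteAsync<TTarget, TResult>(
        Func<TTarget> mintSasTarget,
        Func<TTarget, Task<TResult>> operation,
        Func<Exception, bool> isAuthFailure)
    {
        try
        {
            return await operation(mintSasTarget());
        }
        catch (Exception ex) when (isAuthFailure(ex))
        {
            // The token most likely expired before use. A second failure propagates.
            return await operation(mintSasTarget());
        }
    }
}
```

Retrying only once keeps genuine permission problems visible: a SAS that lacks the required permission fails both times, and the exception still surfaces.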

Conclusion

As you can see, it only takes a few extra lines of code to implement SAS authentication. It's important to use this approach because it is the recommended practice when interacting with Azure Storage. Avoid using your primary or secondary account keys at all costs: they grant full access to the whole storage account, far beyond a specific container or table. Consequently, exposing those keys could compromise your entire storage account. If you want a deeper dive into Azure Storage security, check out this blog post I published recently:

[How to Secure Your Azure Storage Infrastructure](https://www.simple-talk.com/cloud/cloud-data/secure-azure-storage-infrastructure/)

Sample code on GitHub

You can also find a fully working solution on [GitHub](https://github.com/cmatskas/storageSASDemo), in case you want to see how I pulled everything together.